Depth-sensing tech from Qualcomm challenges Apple

This story first appeared on PC Perspective.

New camera and image processing technology from Qualcomm promises to change how Android smartphones and VR headsets see the world. Depth sensing isn’t new to smartphones and tablets; it first saw significant use in Google’s Project Tango and Intel’s RealSense technology. Tango uses a laser-based implementation that measures the roundtrip time of light bouncing off surfaces, but it requires a bulky lens assembly on the rear of the device. As a result, early Tango phones like the Lenovo Phab 2 Pro were hindered by their large size. Intel RealSense was featured in the Dell Venue 8 7000 tablet and let users adjust depth of field and focal points after an image had been captured. It used a pair of cameras and calculated depth from the parallax between them, much as human binocular vision does.
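The roundtrip-timing approach reduces to a simple relationship: light travels to the surface and back, so the distance is the speed of light multiplied by half the roundtrip time. The Python sketch below assumes an idealized direct time-of-flight measurement (no sensor noise, no multipath reflections), and the function name `tof_depth_m` is hypothetical; this is not Tango’s actual implementation.

```python
# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_depth_m(roundtrip_s: float) -> float:
    """Distance to a surface from a light pulse's roundtrip time.

    Idealized direct time of flight (an assumption for illustration):
    the pulse travels out and back, so the one-way distance is half
    of the total path length.
    """
    if roundtrip_s < 0:
        raise ValueError("roundtrip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * roundtrip_s / 2.0


# A surface 1.5 m away returns the pulse after roughly 10 nanoseconds.
roundtrip = 2 * 1.5 / SPEED_OF_LIGHT_M_PER_S
print(f"{roundtrip * 1e9:.2f} ns -> {tof_depth_m(roundtrip):.3f} m")
```

The same arithmetic shows why such sensors need very precise timing: a 1 mm change in distance alters the roundtrip by only about 6.7 picoseconds.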

Modern devices like the iPhone 7 Plus and Samsung Galaxy S8 offer faux depth perception for features like portrait photo modes. In reality, they only emulate the ability to sense depth by using camera lenses with different focal lengths, and they don’t provide true depth mapping.

New technology and integration programs at Qualcomm aim to improve the performance, capability, and availability of true depth sensing for Android smartphones and VR headsets this year. For entry-level devices that today cannot use depth sensing at all, Qualcomm built a passive camera module that estimates depth from parallax displacement. This requires two matching camera lenses separated by a known offset distance. Even low-cost phones will be able to add image quality enhancements like bokeh background blur and basic mixed or augmented reality, bringing the technology to a mass market.
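The passive parallax approach can be illustrated with textbook stereo triangulation. For two rectified cameras with focal length f (in pixels) and baseline B (the known offset between the lenses), a point whose image shifts by d pixels between the two views lies at depth Z = f * B / d. The Python sketch below finds d for a single pixel using simple sum-of-absolute-differences block matching and converts it to depth. Qualcomm has not described its matching algorithm, so the method, the function names, and the focal length and baseline values are all illustrative assumptions.

```python
def disparity_at(left_row, right_row, x, window=2, max_disparity=16):
    """Estimate the horizontal shift (disparity, in pixels) of pixel x between
    two rectified image rows using sum-of-absolute-differences block matching.

    Assumes the left camera sits to the left of the right camera, so a feature
    at column x in the left row appears at column x - d in the right row.
    """
    if x - window < 0 or x + window >= len(left_row):
        raise ValueError("matching window falls outside the row")
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        xr = x - d
        if xr - window < 0:
            break  # candidate window would run off the edge of the right row
        cost = sum(abs(left_row[x + k] - right_row[xr + k])
                   for k in range(-window, window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo triangulation: Z = f * B / d (depth in meters)."""
    if disparity_px <= 0:
        return float("inf")  # no measurable parallax: point is effectively at infinity
    return focal_px * baseline_m / disparity_px


# Synthetic example: the left row is the right row shifted 5 pixels to the right.
right = [(i * 37) % 101 for i in range(64)]
left = [0] * 5 + right[:-5]
d = disparity_at(left, right, x=30)
# Hypothetical optics: 1000 px focal length, 10 mm baseline.
print(d, depth_from_disparity(d, focal_px=1000.0, baseline_m=0.01))
```

Because Z = f * B / d, a one-pixel disparity error corresponds to a depth error of roughly Z² / (f * B), which grows quickly with distance. That is one reason the short baseline of a phone-sized module favors near-range uses like bokeh and basic AR.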

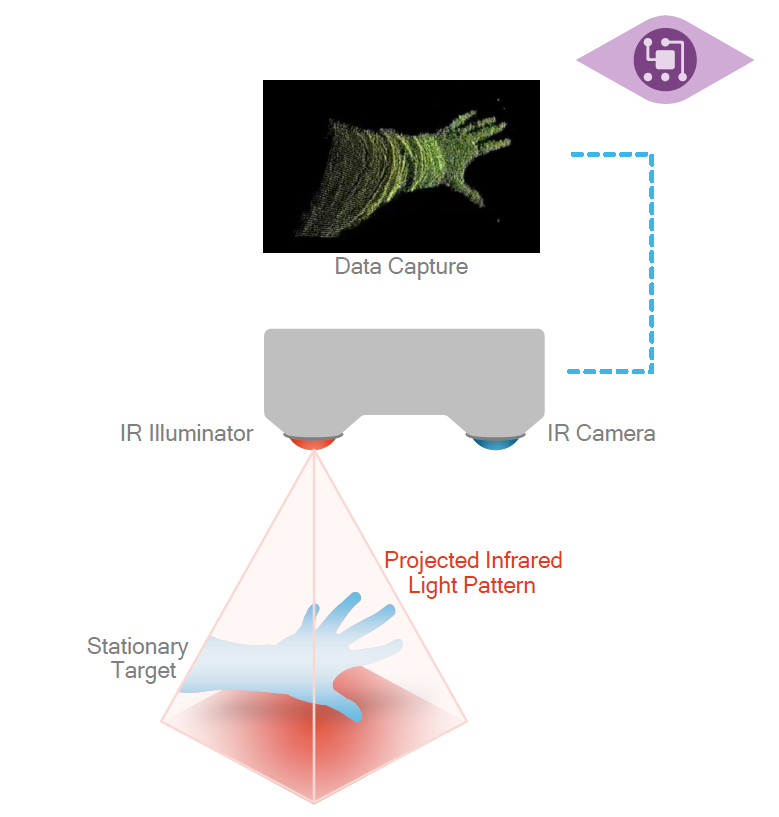

The more advanced tier of the Qualcomm Spectra module program provides active depth sensing with a set of three components. A standard high-resolution camera is paired with both an infrared projector and an infrared camera, which together create high-resolution depth maps. The projector casts a preset infrared pattern into the scene; it is invisible to the human eye but picked up by the IR camera. The Spectra image processor on the Qualcomm Snapdragon mobile platform then measures the displacement and deformation of the pattern to determine the depth and location of objects in the physical world. This happens in real time, at high frame rates and high resolution, producing a “cloud” of 10,000 data points in a virtual 3D space.
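Active structured-light systems triangulate much like a stereo pair, with the projector standing in for the second camera. One common textbook model calibrates against a flat reference plane at a known depth Z0 and measures how far each projected dot shifts from its reference position: 1/Z = 1/Z0 + d / (f * B). Each depth is then back-projected through the camera’s pinhole intrinsics to get an (x, y, z) point, and the collection of points forms the point cloud. Qualcomm has not published the Spectra pipeline, so the model, its sign convention, the hypothetical `Intrinsics` type, and all numbers below are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Hypothetical pinhole model of the IR camera."""
    fx: float  # focal length in pixels, horizontal
    fy: float  # focal length in pixels, vertical
    cx: float  # principal point, horizontal (pixels)
    cy: float  # principal point, vertical (pixels)


def depth_from_pattern_shift(shift_px, ref_depth_m, focal_px, baseline_m):
    """Reference-plane structured-light model: 1/Z = 1/Z0 + shift / (f * B).

    shift_px is how far a projected dot moved along the projector-camera
    baseline compared with where it appeared on a calibration plane at
    ref_depth_m. Assumed sign convention: positive shift means closer.
    """
    inv_z = 1.0 / ref_depth_m + shift_px / (focal_px * baseline_m)
    if inv_z <= 0:
        raise ValueError("shift implies a point at or beyond infinity")
    return 1.0 / inv_z


def back_project(u, v, depth_m, k):
    """Pinhole back-projection of pixel (u, v) at depth_m into camera coordinates."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def point_cloud(dots, k, ref_depth_m, baseline_m):
    """Turn detected dots, given as (u, v, shift_px) tuples, into 3D points."""
    return [
        back_project(u, v, depth_from_pattern_shift(s, ref_depth_m, k.fx, baseline_m), k)
        for u, v, s in dots
    ]


# Hypothetical 640x480 IR camera, 5 cm projector-camera baseline,
# calibrated against a reference plane 2 m away.
k = Intrinsics(fx=580.0, fy=580.0, cx=320.0, cy=240.0)
dots = [(320, 240, 0.0), (400, 240, 14.5), (250, 300, -5.0)]
for x, y, z in point_cloud(dots, k, ref_depth_m=2.0, baseline_m=0.05):
    print(f"x={x:+.3f} m  y={y:+.3f} m  z={z:.3f} m")
```

A dot with zero shift lies on the reference plane, while dots shifted one way or the other land nearer or farther; repeating this for every dot the IR camera detects yields the point cloud.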

For consumers, this means better security and more advanced features on mobile devices. Face detection and mapping that combines the standard camera input with IR depth sensing will allow for highly accurate and secure authentication. Qualcomm claims the accuracy is high enough to prevent photos of faces, and even 3D models of faces, from unlocking the device, thanks to the way human skin and eyes interact with IR light.

Active depth sensing will also make 3D reconstruction of physical objects possible, allowing gamers to bring real items into virtual worlds. It also lets designers accurately measure physical spaces and then explore them in full 3D. Virtual and augmented reality will benefit from more accurate localization and mapping algorithms, improving the “inside-out” tracking of dedicated headsets and slot-in devices like Samsung’s Gear VR and Google Daydream.

Though the second-generation Qualcomm Spectra ISP (image signal processor) is required for the complex compute tasks that depth sensing creates, the company’s module program matters more for adoption, speed of integration, and cost to potential customers. By working with companies like Sony on image sensors and module integration, Qualcomm has pre-qualified sets of hardware and provides calibration profiles that its licensees can select from and build into upcoming devices. These arrangements let Qualcomm take some of the burden off handset vendors, lowering development time and costs and getting depth sensing and advanced photo capabilities into Android phones faster.

It has been all but confirmed that the upcoming Apple iPhone 8 will include face detection, and the company’s push into AR (augmented reality) with iOS 11 points to a bet on depth sensing technology as well. Though Apple is letting developers build AR applications and integrations on the current A9 and A10 processors, it will likely build its own co-processor to handle the compute workloads of active depth sensing and avoid the power cost of running them on a general-purpose processor.

Early leaks indicate that Apple will take its face detection technology down a path similar to the one Qualcomm has paved: security and convenience. With depth-based facial recognition handling both login and security (as a Touch ID replacement), users will have an alternative to fingerprints. That is good news for a device that is reportedly having trouble moving to a fingerprint sensor that spans the entire screen.

It now looks like a race to integration between Android and Apple devices. The Qualcomm Spectra ISP and module program will accelerate adoption across the large Android market and its wide range of price points, giving handset vendors another reason to consider Qualcomm chipsets over competing solutions. Apple benefits from controlling its entire hardware, software, and supply chain, and will see immediate adoption of the capabilities when the next-generation iPhone makes its debut.